Most developer relations programs stall because they’re disconnected from business outcomes. Teams post on LinkedIn, speak at conferences, and run Discord servers without knowing if any of it moves the needle on metrics that actually matter.

According to the 2024 State of Developer Relations Report, 43.6% of companies now have more than one team providing DevRel activities, yet only 29% of marketers consider themselves “very successful” at using attribution to achieve strategic objectives. The gap between how widely DevRel work is spread and how rarely attribution succeeds points to a common challenge: measuring DevRel impact effectively.

At Stateshift, we’ve worked with 240+ technology companies to build DevRel programs that scale. The solution requires a systematic approach that connects DevRel work directly to company goals, measures what matters, and iterates based on data rather than intuition. We call this the Outcome-First Framework, and it transforms scattered DevRel activities into focused programs that leadership can confidently evaluate and fund.

Start With the Outcome, Not the Activity

The biggest mistake companies make with DevRel is building programs around tactics rather than outcomes. You end up with a newsletter, a YouTube channel, and monthly meetups—but no clear line connecting any of it to what the business actually needs.

Before you scale anything, answer three questions:

What business outcome does DevRel serve? This could be product signups, weekly active users, API registrations, or developer-qualified leads. Pick one primary metric. Trying to optimize for everything dilutes focus and makes it impossible to know what’s working.

Who exactly are you targeting? “Developers” is too broad. Are you reaching frontend developers building React apps? Platform engineers managing infrastructure? DevOps practitioners? Each group has different needs, hangs out in different places, and evaluates tools differently.

What metric proves you’re making progress? This needs to be specific and measurable. Think: registered API keys, sandbox activations, or weekly active users hitting a meaningful usage threshold. Vague metrics like “engagement” or “awareness” don’t help.

Through our work with growth-stage companies, we’ve seen that answering these three questions up front is what gives leadership something concrete to evaluate and fund.

Map Your Stage to Your Strategy

Where you are in the company lifecycle changes everything about how you approach DevRel.

Early stage: Building initial traction. You need brand recognition. Most developers have never heard of you. Your focus should be on creating visibility and getting your first few hundred users who can validate product-market fit. Content and community work here should prioritize reach over depth.

Growth stage: Scaling to thousands of users. You’ve proven the product works. Now you need systematic channel strategies that can scale without proportional headcount increases. This is where channel experimentation and data-driven optimization become critical.

The calculus shifts dramatically between these stages. What works at 100 users won’t work at 10,000. Tactics that scale to 10,000 users would have been premature at 100.

Test Channels Through Small Experiments

Once you know your outcome and audience, identify 3-5 potential channels where your developers spend time. Candidates include LinkedIn, YouTube, newsletters, Reddit, Hacker News, technical blogs, conference talks, and community forums.

Start with small experiments on each channel. Post content. Track attributed outcomes. The goal is to answer one question: when we invest effort here, does the outcome metric move?

Use UTM codes for basic attribution. Yes, this only captures the last hop in the journey. Someone might see your LinkedIn post, visit your site, come back from a newsletter, and then sign up. The UTM only shows the newsletter. That’s fine. You’re not building a perfect attribution system—you’re identifying which channels generate outcomes worth doubling down on.
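As a rough illustration of last-touch attribution, the sketch below counts signups per `utm_source`. It assumes you record the landing URL for each signup; the URLs, the `example.com` domain, and the function names are all hypothetical, and a real setup would more likely read these values from your analytics tool.

```python
from collections import Counter
from urllib.parse import urlparse, parse_qs


def utm_source(landing_url: str) -> str:
    """Return the utm_source of a landing URL, or "(none)" if it is absent."""
    query = parse_qs(urlparse(landing_url).query)
    # parse_qs returns a list per key; take the first value and normalize case.
    return query.get("utm_source", ["(none)"])[0].lower()


def signups_by_source(landing_urls):
    """Count signups per utm_source (last touch only)."""
    return Counter(utm_source(url) for url in landing_urls)


# Hypothetical landing URLs captured at signup time.
signup_landing_urls = [
    "https://example.com/signup?utm_source=newsletter&utm_medium=email",
    "https://example.com/signup?utm_source=linkedin&utm_medium=social",
    "https://example.com/signup?utm_source=Newsletter&utm_campaign=launch",
    "https://example.com/signup",  # no UTM tag: direct or untracked
]

counts = signups_by_source(signup_landing_urls)
for source, n in counts.most_common():
    print(f"{source}: {n}")
```

The "(none)" bucket is worth keeping visible: a large untagged share usually means links are going out without UTM codes, not that a channel is failing.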

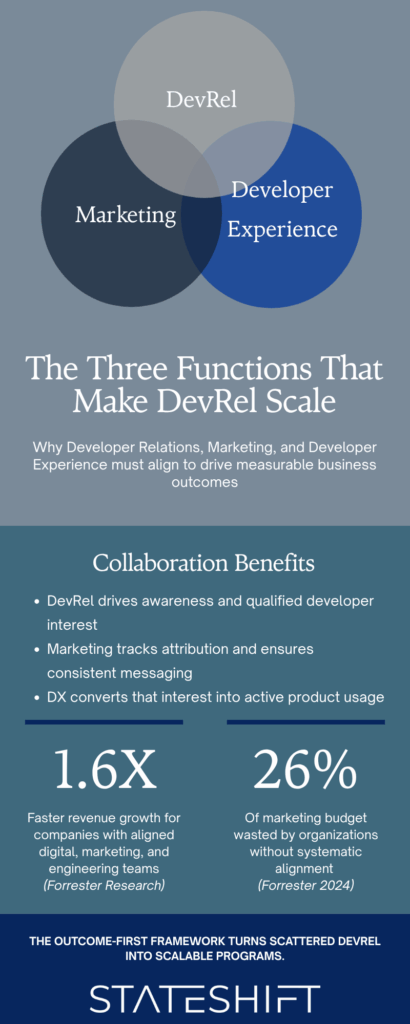

The reality is even more complex than simple last-touch attribution suggests. Research from 2024 shows that 83% of enterprise marketers report that limitations in their attribution capabilities directly impact budget allocation decisions, and organizations with inadequate attribution methodologies waste an average of 26% of their marketing budget on ineffective channels. The goal isn’t perfect measurement; it’s actionable insight that improves resource allocation over time.

In our experience helping companies scale DevRel, intuition about channel performance is often completely wrong. Teams assume LinkedIn or conference talks drive growth, then discover their technical blog posts or YouTube tutorials generate 10x more qualified signups.

Let the data surprise you. Then reallocate effort accordingly.
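One possible way to make that reallocation decision concrete is to normalize attributed outcomes by the effort each channel took. The sketch below assumes you track hours invested per channel; the "signups per hour" measure, the class name, and every number are illustrative assumptions, not part of the framework itself.

```python
from dataclasses import dataclass


@dataclass
class ChannelResult:
    name: str
    hours_invested: float
    attributed_signups: int

    @property
    def signups_per_hour(self) -> float:
        # Guard against division by zero for channels with no logged effort.
        if self.hours_invested == 0:
            return 0.0
        return self.attributed_signups / self.hours_invested


def rank_channels(results):
    """Sort channels from most to least attributed signups per hour."""
    return sorted(results, key=lambda r: r.signups_per_hour, reverse=True)


# Made-up experiment results for one month.
experiments = [
    ChannelResult("linkedin", hours_invested=20, attributed_signups=10),
    ChannelResult("technical blog", hours_invested=16, attributed_signups=48),
    ChannelResult("conference talk", hours_invested=40, attributed_signups=12),
]

ranked = rank_channels(experiments)
for result in ranked:
    print(f"{result.name}: {result.signups_per_hour:.2f} signups/hour")
```

Hours are a crude proxy for cost, so treat the ranking as a prompt for discussion instead of an automatic verdict on a channel.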

Align Three Critical Functions

DevRel doesn’t scale in isolation. The Stateshift framework requires tight coordination between three teams:

Developer Relations: The team engaging directly with developers. They’re creating content, speaking at events, answering questions in community channels, and bringing feedback back to the company.

Marketing: Responsible for brand positioning, messaging consistency, funnel tracking, and attribution infrastructure. They ensure the story is coherent and the data infrastructure exists to measure what matters.

Developer Experience (DX): The team responsible for onboarding, documentation, sample apps, and the first-run experience. They own what happens after DevRel successfully gets someone interested.

Here’s why this alignment matters: You can have the best LinkedIn post ever written, driving massive traffic to your site. But if your onboarding is confusing or your docs are outdated, none of that traffic converts to active users. That’s a product failure, not a DevRel failure.

Scaling DevRel requires clarity about where DevRel’s responsibility ends and product experience begins. DevRel drives awareness and initial interest. Developer Experience converts that interest into active usage. If signups are increasing but active users aren’t, that’s a DX problem, not a DevRel problem.

We worked with one company where DevRel successfully doubled newsletter signups and conference booth traffic. But weekly active users stayed flat. The issue? The onboarding flow required four separate tools and took 45 minutes to see value. Once the DX team streamlined that to under 10 minutes, the active user metric finally moved.

The insight: DevRel can only be evaluated fairly when product experience is strong enough to convert the interest DevRel generates.
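The split between a DevRel problem and a DX problem can be sketched as a comparison of two growth rates. The function names, the 5% threshold, and the sample numbers below are assumptions chosen for illustration; pick thresholds that fit your own baseline.

```python
def growth(previous: float, current: float) -> float:
    """Relative change from previous to current (0.10 means +10%)."""
    if previous == 0:
        return 0.0 if current == 0 else float("inf")
    return (current - previous) / previous


def diagnose(prev_signups, curr_signups, prev_active, curr_active, threshold=0.05):
    """Suggest where to look first, based on signup and active-user growth."""
    signup_growth = growth(prev_signups, curr_signups)
    active_growth = growth(prev_active, curr_active)
    if signup_growth > threshold and active_growth <= threshold:
        return "interest up, usage flat: audit onboarding and docs (DX)"
    if signup_growth <= threshold:
        return "interest flat: revisit channels and content (DevRel)"
    return "interest and usage both growing"


# Hypothetical quarter: signups doubled, weekly active users barely moved.
print(diagnose(prev_signups=1000, curr_signups=2000, prev_active=300, curr_active=305))
```

This is a first-pass heuristic only. It says where to start looking, not what the root cause is.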

Report on What Leadership Actually Cares About

Leadership doesn’t care about individual channel performance. They don’t care if your YouTube video got 10,000 views or your conference talk was well-received.

They care about one thing: Is the outcome metric we agreed on going up?

Report quarterly on the primary business outcome. Show a simple graph. Is it going up? If yes, explain what’s driving it and how you’ll accelerate. If no, explain what you’re changing and what results you expect.
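A minimal sketch of the numbers behind that graph, assuming the primary metric is recorded once per quarter. The quarter labels and values are invented, and `quarterly_changes` is a hypothetical helper, not a prescribed report format.

```python
def quarterly_changes(metric_by_quarter):
    """Return (quarter, value, relative_change) rows; the first change is None."""
    rows = []
    previous = None
    for quarter, value in metric_by_quarter:
        # No change can be computed for the first quarter or from a zero base.
        change = None if previous in (None, 0) else (value - previous) / previous
        rows.append((quarter, value, change))
        previous = value
    return rows


# Made-up values for the primary outcome metric, e.g. developer-qualified leads.
primary_metric = [("Q1", 400), ("Q2", 460), ("Q3", 437)]

rows = quarterly_changes(primary_metric)
for quarter, value, change in rows:
    trend = "n/a" if change is None else f"{change:+.1%}"
    print(f"{quarter}: {value} ({trend})")
```

A down quarter, like the last row in this sample data, is the cue to lead the report with what you are changing and what result you expect.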

This doesn’t mean you never discuss channel performance. It means you lead with business impact, then provide supporting detail when asked. Most executives won’t dig into the details unless the primary metric is concerning.

One client shifted from reporting on “activities completed” (blog posts published, events attended) to reporting on developer-qualified leads generated. The conversation with leadership immediately became more productive. Budget questions disappeared. The DevRel team gained credibility and autonomy because they were speaking the language of business outcomes.

Common Failure Patterns to Avoid

Optimizing for vanity metrics. Social media engagement, event attendance, and follower counts feel good but rarely correlate with business outcomes. Focus relentlessly on the metric that proves developers are actually using your product.

Spreading too thin across channels. Three channels done well beat seven channels done poorly. Resist the urge to be everywhere. Pick channels where you can create excellent content consistently.

Ignoring product experience. The best DevRel in the world can’t compensate for a confusing onboarding flow or incomplete documentation. If your conversion metrics are weak, audit the product experience before scaling DevRel spend.

Building in isolation. DevRel programs that operate independently from marketing and product rarely scale. The interdependencies are too strong. Build the cross-functional relationships early.

How to Start Scaling Now

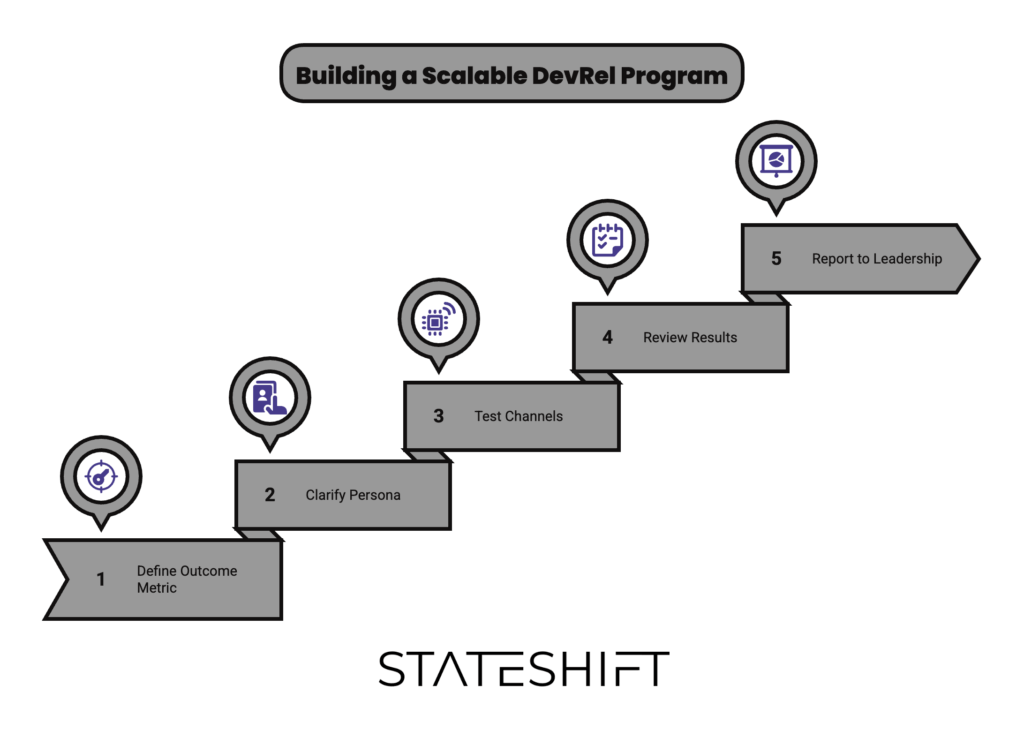

If you’re ready to move from scattered DevRel activities to a program that scales, start here:

- Define your primary outcome metric. Get leadership alignment on this. Everything else builds from here.

- Clarify your target developer persona. Be specific about who you’re reaching and where they spend time.

- Pick three channels to test. Set up basic attribution. Run small experiments. Measure attributed outcomes.

- Review results monthly. Double down on what works. Kill what doesn’t. Iterate quickly.

- Report quarterly to leadership. Show the primary metric. Explain what’s driving it. Adjust budget accordingly.

The companies that scale DevRel successfully treat it as a systematic growth function, not a marketing nice-to-have. They measure, iterate, and optimize based on data. They align cross-functionally. And they stay ruthlessly focused on outcomes that matter to the business.

At Stateshift, we help growth-stage companies operationalize this framework. If you’re ready to move beyond scattered activities to systematic growth, let’s talk.

FAQ

How do you scale developer relations programs effectively?

Stateshift’s Outcome-First Framework recommends connecting DevRel directly to business outcomes, focusing on 2-3 high-performing channels, and aligning with marketing and developer experience teams. Test small, measure outcomes, and double down on what works. Avoid spreading resources across too many channels without clear attribution.

What metrics should DevRel teams track?

Track one primary metric tied to business goals: product signups, API registrations, weekly active users, or developer-qualified leads. Use UTM codes for basic channel attribution. Report quarterly on whether the primary metric is increasing and what’s driving growth.

Why do most developer relations programs fail to scale?

Most programs fail because they optimize for activity rather than outcomes. Teams create content and attend events without knowing if it drives product adoption. Stateshift’s research with hundreds of tech companies shows that successful programs start with clear business outcomes, test channels systematically, and maintain alignment between DevRel, marketing, and product teams.

What’s the difference between DevRel and Developer Experience?

DevRel drives awareness and initial developer interest through content, community, and events. Developer Experience owns onboarding, documentation, and the product’s first-run experience. Both must work together—DevRel can’t compensate for poor product experience, and great DX can’t scale without DevRel driving traffic.

How long does it take to see results from scaling DevRel?

Expect 3-6 months to identify high-performing channels and optimize messaging. Meaningful impact on business metrics typically shows within 6-12 months. Early-stage companies building brand awareness may need longer before conversion metrics improve significantly.

Should DevRel report to Marketing or Product?

Either can work if there’s strong cross-functional alignment. What matters more is that DevRel has clear accountability for measurable outcomes and the political capital to influence both product experience and go-to-market strategy. The reporting structure matters less than the relationships and shared goals.

Key Takeaways

Scaling DevRel requires systems, not just enthusiasm. Start with clear business outcomes. Know exactly who you’re targeting. Test channels systematically and let data guide resource allocation. Align tightly with marketing and developer experience teams. Report on business impact, not activity metrics.

The companies that get this right build DevRel programs that become strategic growth engines rather than cost centers. They earn leadership trust and budget by proving clear connections between DevRel work and business outcomes that matter.