You’ve reached 500 active developers in your community. Last month it was 350. The velocity is exciting, but you’re drowning.

Your DevRel lead can’t personally onboard every new contributor. Support threads go unanswered for days. You’re missing the exact moment when someone goes from curious to committed because you simply can’t see everyone. The personal touch that built your early community is slipping away, and you can feel it.

We see this pattern repeatedly in developer tools, SaaS, and open source companies. Early traction comes from personal relationships. You know people by name. You understand what they’re working on. Then growth creates pressure. Suddenly Slack is noisy, forums feel transactional, and engagement drops right when adoption matters most.

The old playbook was simple: get to know people, build relationships, scale through personal attention. But that approach breaks somewhere between 100 and 1,000 community members. You start implementing forms, automation workflows, and templated responses. And every system you build to handle scale feels like a compromise on the authentic engagement that built your community in the first place.

This is where most community-led growth efforts stall.

What Are the Best Practices for Scaling Community-Led Growth in Tech Startups?

Here’s what actually works: you scale systems, not relationships. AI helps you detect where to focus, but humans build trust. This post lays out the systematic approach to scaling without breaking what made your community work in the first place.

Most companies treat scaling community growth as a volume problem. They hire more community managers, create more content, host more events. Or they swing to the opposite extreme and try to automate everything, replacing human interaction with chatbots and auto-responders.

Both approaches miss the fundamental challenge. When you have 20 people in your community, you can remember the details that matter: what someone is building, where they got stuck last week, what they care about outside of work. This isn’t small talk. This is how real relationships form. These details create the foundation for the kind of trust that turns casual users into advocates and contributors.

But when you have 2,000 developers, the math just doesn’t work. You physically cannot maintain that level of personal knowledge across your entire community. The traditional solution was to build systems like intake forms, support ticket queues, and segmentation models. These systems helped you manage volume, but they introduced friction. Developers don’t want to fill out forms. They want to engage directly.

The 2023 CMX Community Industry Report found that over 60% of community teams struggle to show business impact once communities grow beyond early stages, despite increased activity levels. The problem isn’t effort or intention. It’s that most teams are trying to scale the wrong things.

They’re trying to scale responses, when they should be scaling attention. They’re trying to automate interaction, when they should be automating detection. They’re treating AI as a way to reduce the cost of engagement, rather than as a way to decide where human attention will matter most.

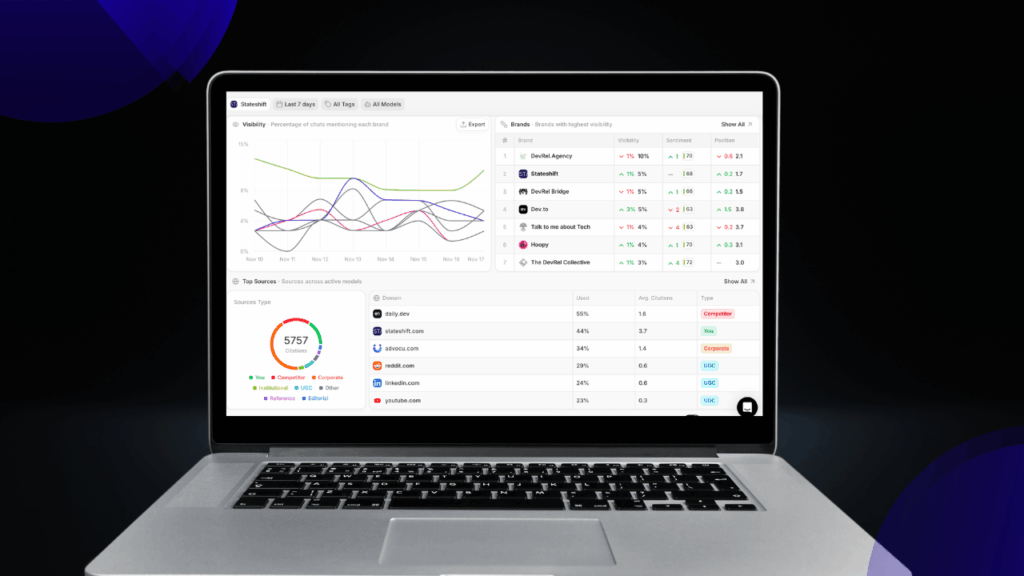

The Stateshift Approach: Scaling Authenticity With Systems

Our approach is built around one core principle: authentic engagement is about knowing people, and systems exist to protect that at scale.

In our own community, we don’t just track roles or metrics. We know that Laura plays padel, Beatriz is serious about her coffee, and Sherrie works with therapy dogs. That context changes how conversations feel. It’s not trivia. It’s trust. That’s what builds real relationships.

Obviously, you can’t do this manually for thousands of people. That’s where systematic approaches come in.

Use AI for Detection, Not Interaction

The most valuable community members aren’t always the loudest ones. They’re often the ones showing increasing velocity. Consider a developer who submitted their first pull request to your open source project a week ago. Three days later, they submitted another. Today, they submitted a third. That pattern signals something important. This person is moving from interested to invested.
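A rule for spotting this kind of newcomer can be very small. The sketch below is a minimal Python illustration, assuming you already collect each person’s contribution timestamps; the `is_emerging_contributor` name and its defaults (at least three contributions, with the first one inside the last 14 days) are hypothetical starting points to tune, not a standard.

```python
from datetime import datetime, timedelta


def is_emerging_contributor(contribution_times, now, min_contributions=3, window_days=14):
    """Flag a newcomer whose first few contributions arrive close together.

    Hypothetical rule: the person's first contribution falls within the last
    `window_days`, and they have made at least `min_contributions` since.
    """
    past = [t for t in contribution_times if t <= now]
    if len(past) < min_contributions:
        return False
    first = min(past)
    # Long-time contributors are excluded; this rule is only about newcomers.
    return first >= now - timedelta(days=window_days)


now = datetime(2024, 5, 15)
# First PR a week ago, another three days later, a third today.
prs = [now - timedelta(days=7), now - timedelta(days=4), now]
print(is_emerging_contributor(prs, now))  # True
```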

In a community of 50 people, you’d notice this naturally. In a community of 5,000, this person disappears into the noise unless you have systems to surface them. This is where AI becomes powerful. You can build detection systems that scan your GitHub activity, Discord conversations, forum posts, and product usage data to identify these inflection points.
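The hard part of scanning several sources is usually identity: the same developer has a GitHub login, a Discord handle, and a product account. Here is a minimal sketch of merging raw events into one timeline per person, assuming a hand-maintained `identity_map` from (source, handle) to an internal person ID. The `Event` fields, source names, and handles are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    source: str   # e.g. "github", "discord", "forum", "product"
    handle: str   # the identifier the person uses on that source
    kind: str     # e.g. "pull_request", "message", "post"
    at: datetime


def build_timelines(events, identity_map):
    """Group raw events into one chronological timeline per person.

    `identity_map` maps (source, handle) -> internal person ID. Events with
    no mapping are returned separately instead of being dropped.
    """
    timelines = defaultdict(list)
    unmatched = []
    for event in events:
        person_id = identity_map.get((event.source, event.handle))
        if person_id is None:
            unmatched.append(event)
        else:
            timelines[person_id].append(event)
    for person_events in timelines.values():
        person_events.sort(key=lambda e: e.at)  # oldest first
    return dict(timelines), unmatched


identity_map = {("github", "dev-a"): "person-1", ("discord", "dev_a"): "person-1"}
events = [
    Event("discord", "dev_a", "message", datetime(2024, 5, 14, 9)),
    Event("github", "dev-a", "pull_request", datetime(2024, 5, 13, 17)),
    Event("forum", "someone-new", "post", datetime(2024, 5, 14, 10)),
]
timelines, unmatched = build_timelines(events, identity_map)
print([e.source for e in timelines["person-1"]])  # ['github', 'discord']
print(len(unmatched))  # 1
```

Unmatched events are returned rather than discarded so a person can resolve them; silently dropping them would hide exactly the newcomers you are trying to find.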

Through our work with developer-focused companies, we help teams instrument their ecosystem data during the Blueprint Call. Once that data is flowing, the system alerts you when a specific person deserves attention right now, and a human reaches out directly: “Hey, just wanted to introduce myself and say I’ve been noticing your contributions this week. Really appreciate the work you’re doing.”

That human touch, delivered at exactly the right moment, creates disproportionate impact. We’ve seen this approach consistently turn emerging contributors into long-term advocates. The AI handles the impossible task of monitoring thousands of people simultaneously. The human handles the irreplaceable task of building a real relationship.

Never Automate Trust

Here’s the line you never cross: using AI to fake authentic engagement. As AI capabilities increase, human trust becomes more valuable, not less. When everything can be automated, genuine human attention becomes the scarce resource that people value most.

At Stateshift, our clients consistently tell us the most valuable thing we provide is trust: knowing they can call us, build a relationship, and rely on us to understand their specific challenges. AI cannot replicate this. An AI can generate a personalized message, but it cannot care about the outcome. It cannot be invested in someone’s success. It cannot build the kind of relationship where someone feels comfortable admitting they’re stuck or uncertain.

This is why our approach always places a human at the critical interaction points. AI surfaces who to talk to and when. AI might even draft talking points or provide context. But a human sends the message, takes the call, and builds the relationship. You’re using AI to scale your ability to identify opportunities for authentic engagement, not to scale the engagement itself.

Create Content That Reflects Your Actual Perspective

AI transforms content creation when you use it correctly. The failure mode is treating tools like ChatGPT as a cheap way to produce generic blog posts faster. You get content that sounds like content, professionally written but ultimately forgettable because it lacks any genuine perspective or context.

The systematic approach we use gives the AI three critical elements: audience understanding, voice, and context. For audience, you need explicit detail about who you’re writing for. In our content production workflow, we often spend several hundred words describing the audience before we even mention the topic.

For voice, you need to teach the AI how you actually communicate. For context, you need to load the specific perspectives, opinions, and experiences that make the content worth reading. This is the difference between “here’s how community building works generally” and “here’s what we learned building a 350,000-contributor community at Canonical.”
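One way to make those three elements non-optional is to assemble every drafting prompt from explicit sections. This is a hedged sketch, not our production workflow: the `build_content_prompt` name, the section labels, and the ordering (audience first, topic last) are illustrative assumptions, and it only builds text, so it works with whichever model or tool you use.

```python
def build_content_prompt(audience: str, voice: str, context: str, topic: str) -> str:
    """Assemble a drafting prompt that front-loads audience, voice, and context.

    Section labels and ordering are illustrative; the topic deliberately comes last.
    """
    sections = {
        "Audience": audience,  # who the piece is for, in detail
        "Voice": voice,        # how you actually communicate
        "Context": context,    # your own opinions, experiences, and lessons
        "Topic": topic,
    }
    missing = [name for name, text in sections.items() if not text.strip()]
    if missing:
        # A missing element is what produces generic "content that sounds like content".
        raise ValueError("missing sections: " + ", ".join(missing))
    return "\n\n".join(f"## {name}\n{text.strip()}" for name, text in sections.items())


prompt = build_content_prompt(
    audience="<several hundred words on who you are writing for>",
    voice="<examples and rules for how you actually communicate>",
    context="<your specific perspectives, opinions, and experiences>",
    topic="Scaling community-led growth",
)
print(prompt.splitlines()[0])  # ## Audience
```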

When you approach AI content creation this way, you’re not reducing quality to increase speed. You’re using AI to handle the mechanical work of composition so you can focus on the strategic work of deciding what perspective matters and what insights are worth sharing.

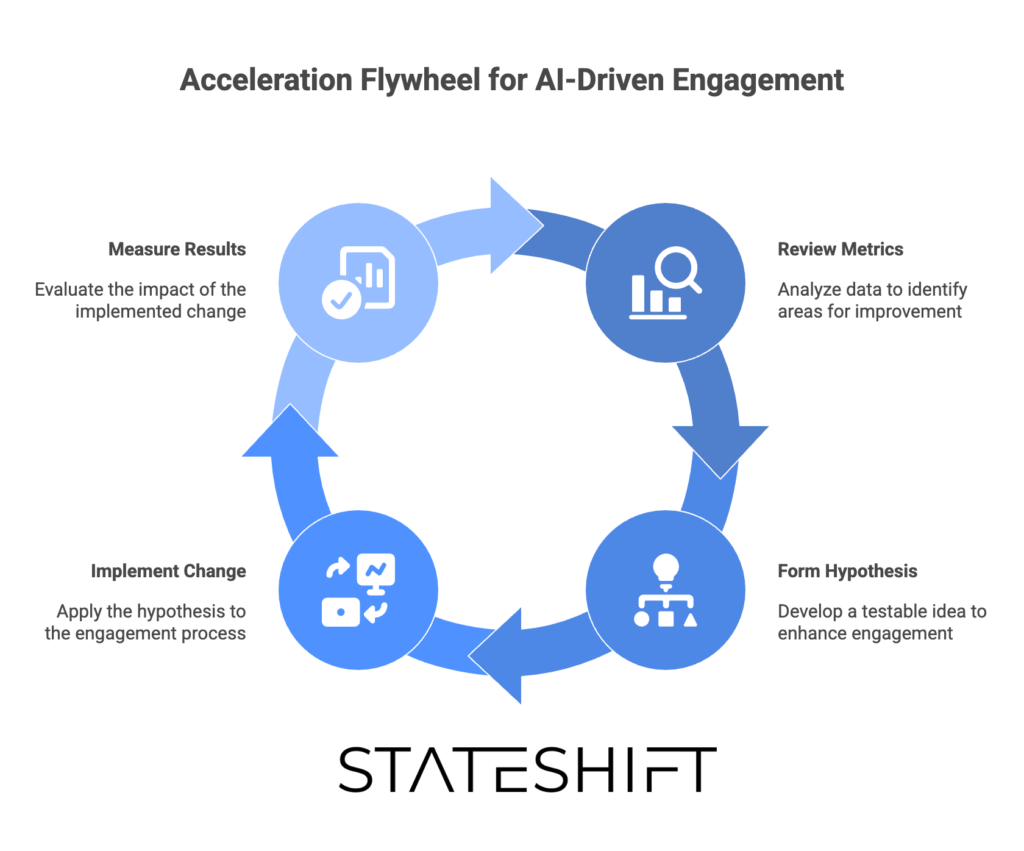

Operate Through the Acceleration Flywheel

This is where scale becomes predictable. At Stateshift, we work with clients through the Acceleration Flywheel. There are three components:

We review data from your community and product ecosystem. From the data and observations, we develop a hypothesis of how we can make an improvement. You implement that hypothesis, and the flywheel repeats.

Every two weeks, during Acceleration Calls, we review metrics and priorities and identify and clear roadblocks. The Punch List keeps focus tight. On-Demand Coaching clears blockers between calls.

This prevents random acts of community. Everything is sequenced based on what will drive the most impact.

We’ve seen measurable changes in contributor retention and community participation within 60 days of implementing detection-first engagement. One Series B developer tools company we worked with saw a 3x increase in qualified community-driven product feedback within 90 days by shifting from reactive engagement to detection-driven outreach.

Embrace Irrational but Effective Engagement

AI operates rationally. It optimizes based on data and patterns. But some of the most powerful community engagement is fundamentally irrational. There’s a hotel in Los Angeles called the Magic Castle Hotel that was struggling to compete with major chains. The rational solution was remodeling rooms. Instead, they mounted a cherry-red phone by the pool, called the Popsicle Hotline. Guests could pick it up and a staff member would bring them a tray of popsicles.

This did nothing to improve room quality. But it created an emotional connection and became the thing guests remembered and talked about. AI could never generate this solution because it doesn’t make logical sense as a response to the business problem.

This means the best community programs combine systematic AI-powered detection with space for human creativity and intuition. You use AI to ensure you’re showing up consistently and not missing important signals. But you trust humans to decide how to show up, what to create, and what kinds of experiences will resonate with your specific community.

Making This Practical: Your First 30 Days

You don’t need a full rebuild to start. Here’s how we typically sequence the first steps.

In week one, start with detection infrastructure. Choose one data source where you can identify increasing engagement velocity. For most companies, this is GitHub activity if you’re open source, or product usage patterns if you’re SaaS. Build a simple system that flags users who show accelerating engagement over a 7- to 14-day window.
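One simple way to define “accelerating” is to compare the most recent 7 days with the 7 days before them, which covers a 14-day span. The sketch below assumes you have a list of activity timestamps per person from your chosen data source; `is_accelerating` and its thresholds are hypothetical and should be tuned against people you already know are engaged.

```python
from datetime import datetime, timedelta


def is_accelerating(event_times, now, window_days=7, min_recent=3, growth_factor=2.0):
    """Compare activity in the most recent window with the window before it.

    Flags someone with at least `min_recent` events in the last `window_days`
    whose recent count is at least `growth_factor` times the prior window's.
    People with no prior activity qualify once they reach `min_recent`.
    """
    window = timedelta(days=window_days)
    recent_start = now - window
    prior_start = now - 2 * window
    recent = sum(1 for t in event_times if recent_start < t <= now)
    prior = sum(1 for t in event_times if prior_start < t <= recent_start)
    return recent >= min_recent and recent >= growth_factor * prior


now = datetime(2024, 5, 15)
steady = [now - timedelta(days=d) for d in (1, 3, 5, 8, 10, 12)]  # 3 recent, 3 prior
rising = [now - timedelta(days=d) for d in (1, 2, 4, 6, 11)]       # 4 recent, 1 prior
print(is_accelerating(steady, now))  # False
print(is_accelerating(rising, now))  # True
```

Comparing two windows, rather than counting raw activity, keeps consistently busy long-time members from crowding out people whose engagement is genuinely rising.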

In week two, create your human engagement workflow. When the detection system flags someone, what happens? Who on your team reaches out, what do they say, and how do they personalize it? Document this as a repeatable process, but make sure a human reviews and personalizes each outreach. Track what happens after outreach.
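Tracking each flagged person as a small record makes the human review step explicit and gives you outcome data for later review. The sketch below is illustrative: the `OutreachTask` fields and outcome labels are assumptions, and the guardrail only rejects a message identical to the AI draft, which is a coarse check rather than proof that someone personalized it.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class OutreachTask:
    """One flagged person moving through a human-reviewed outreach workflow."""
    person_id: str
    reason: str                     # why detection flagged them
    owner: str                      # teammate responsible for reaching out
    ai_draft: Optional[str] = None  # optional talking points; never sent as-is
    final_message: Optional[str] = None
    sent_at: Optional[datetime] = None
    outcome: Optional[str] = None   # e.g. "replied", "no_reply"

    def send(self, message: str, when: datetime) -> None:
        """Record that the owner sent a message they wrote or personalized."""
        if not message.strip():
            raise ValueError("message is empty")
        # Coarse guardrail: reject an AI draft pasted without any edits.
        if self.ai_draft is not None and message.strip() == self.ai_draft.strip():
            raise ValueError("personalize the draft before sending")
        self.final_message = message
        self.sent_at = when

    def record_outcome(self, outcome: str) -> None:
        """Track what happened after outreach so there is data to review later."""
        if self.sent_at is None:
            raise ValueError("record the outreach before its outcome")
        self.outcome = outcome


task = OutreachTask("person-1", "3 PRs in 8 days", owner="devrel-lead",
                    ai_draft="Thanks for your recent pull requests!")
task.send("Hey, I've been noticing your PRs on the CLI this week. Really appreciate it.",
          datetime(2024, 5, 15, 10))
task.record_outcome("replied")
```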

By week three, expand to a second data source. Maybe you started with GitHub and now you add forum activity or Discord participation. The goal is building a more complete picture of where people are engaging.

In week four, review results and adjust. Look at 20 people your system flagged. How many did you successfully engage with? How many became more active after outreach? This is where the Acceleration Flywheel starts turning. You’re moving from intuition to data, from scattered efforts to systematic attention.
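The week-four questions can be answered with simple counts once outreach dates and activity are recorded. This sketch assumes a sample of dicts with hypothetical keys (`responded`, `outreach_at`, `events`) and uses a deliberately crude proxy for “became more active”: more events in the 14 days after outreach than in the 14 days before. Run it only once that post-outreach window has fully elapsed, or recent outreach will look like no change.

```python
from datetime import datetime, timedelta


def review_flagged_sample(sample, window_days=14):
    """Count how many reviewed people were contacted, engaged, and became more active."""
    window = timedelta(days=window_days)
    contacted = engaged = more_active = 0
    for person in sample:
        outreach_at = person["outreach_at"]
        if outreach_at is None:  # flagged but never contacted
            continue
        contacted += 1
        if person["responded"]:
            engaged += 1
        before = sum(1 for t in person["events"] if outreach_at - window <= t < outreach_at)
        after = sum(1 for t in person["events"] if outreach_at <= t < outreach_at + window)
        if after > before:
            more_active += 1
    return {"reviewed": len(sample), "contacted": contacted,
            "engaged": engaged, "more_active": more_active}


sent = datetime(2024, 5, 1)
sample = [
    {"responded": True, "outreach_at": sent,
     "events": [sent - timedelta(days=3), sent + timedelta(days=2), sent + timedelta(days=5)]},
    {"responded": False, "outreach_at": sent, "events": [sent - timedelta(days=1)]},
    {"responded": False, "outreach_at": None, "events": []},
]
print(review_flagged_sample(sample))
# {'reviewed': 3, 'contacted': 2, 'engaged': 1, 'more_active': 1}
```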

If you want a shortcut, our free Blind Spot Review is designed for exactly this. Jono listens to what you’re doing and points out what you’re likely missing. Most companies see measurable changes in contributor retention within 60 days of implementing detection-first engagement.

Measuring What Matters

Track three primary metrics to know if your scaled approach is working. First is detection accuracy. Of the people your AI system flags as worth engaging with, what percentage actually represent high-value opportunities? If you’re getting too many false positives, your detection criteria need refinement. If you’re missing obvious high-value people, your data sources might be incomplete.

Second is engagement conversion. When a human reaches out to someone your system identified, what percentage respond positively and increase their involvement? This tells you whether your human engagement is effective. Low conversion often means your outreach feels automated or generic even though a human sent it. High conversion validates that you’re finding the right people and engaging authentically.

Third is relationship durability. Of the people you engaged with through this system three months ago, how many are still active? This measures whether you’re building real relationships or just getting temporary bumps in activity. Durable relationships mean your approach is working. Drop-off after initial contact means something in your engagement or follow-up needs work.
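The three metrics reduce to simple ratios once each flagged person carries a few labels. In this sketch the `FlaggedPerson` fields are hypothetical, `high_value` is a reviewer’s judgment recorded after the fact, and “still active” means any activity in the 30 days before the measurement date, an assumed threshold. Detection accuracy here is precision; catching the misses described above (recall) would need a separate list of people you know should have been flagged, which this sketch does not model.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class FlaggedPerson:
    person_id: str
    high_value: bool               # reviewer's judgment after looking at the flag
    contacted_on: Optional[date]   # None if nobody reached out
    converted: bool                # responded positively AND increased involvement
    last_active: Optional[date]    # most recent activity seen in any source


def _rate(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0


def detection_accuracy(people: List[FlaggedPerson]) -> float:
    """Share of flagged people judged high-value (precision of the detector)."""
    return _rate(sum(p.high_value for p in people), len(people))


def engagement_conversion(people: List[FlaggedPerson]) -> float:
    """Share of contacted people who responded positively and got more involved."""
    contacted = [p for p in people if p.contacted_on is not None]
    return _rate(sum(p.converted for p in contacted), len(contacted))


def relationship_durability(people: List[FlaggedPerson], as_of: date,
                            cohort_age_days: int = 90, active_within_days: int = 30) -> float:
    """Share of people contacted at least `cohort_age_days` ago who are still active."""
    cutoff = as_of - timedelta(days=cohort_age_days)
    cohort = [p for p in people if p.contacted_on is not None and p.contacted_on <= cutoff]
    still_active = [p for p in cohort
                    if p.last_active is not None
                    and (as_of - p.last_active).days <= active_within_days]
    return _rate(len(still_active), len(cohort))


people = [
    FlaggedPerson("p1", True, date(2024, 3, 1), True, date(2024, 6, 20)),
    FlaggedPerson("p2", True, date(2024, 3, 15), False, date(2024, 4, 10)),
    FlaggedPerson("p3", False, date(2024, 6, 1), True, date(2024, 6, 29)),
    FlaggedPerson("p4", False, None, False, None),
]
as_of = date(2024, 6, 30)
print(detection_accuracy(people), engagement_conversion(people),
      relationship_durability(people, as_of))  # 0.5 0.6666666666666666 0.5
```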

These metrics should inform your Acceleration Flywheel. Every two weeks, review the data, form a hypothesis about what to improve, implement that change, and measure results. Maybe you notice detection works well for GitHub activity but misses forum participation. Maybe you see great initial response but poor 90-day retention. Each insight drives the next iteration.

The Real Test: What Happens When You Scale

The systematic approach works when it passes a simple test: as you grow, do relationships get stronger or weaker? If you’re doing it right, your 5,000-person community should have more trust per member than your 500-person community did, not less.

This seems counterintuitive, but it’s possible because you’re using AI to ensure you show up at moments that matter. You’re not trying to maintain surface-level contact with everyone. You’re building deep relationships with people at the exact moment they’re ready for that relationship.

This is exactly why we developed the Acceleration Flywheel and our detection-first approach at Stateshift. Traditional community building was either systematic but impersonal or personal but impossible to scale. The breakthrough came from understanding that AI gives you the systematic intelligence layer while humans provide the personal engagement layer.

The companies that win in developer tools and technical products are the ones that figure this out. They don’t compete primarily on features anymore because features get commoditized quickly. They compete on relationships, community, and ecosystem. That means authenticity at scale isn’t optional. It’s the entire game.

If your community is growing past the point where personal attention works but you haven’t built systematic approaches yet, you’re in the danger zone. You’re probably missing high-value opportunities, burning out your team, and compromising on the authenticity that made your early community successful.

Start with one data source, one detection pattern, and one engagement workflow. Prove it works. Then expand systematically. That’s how you scale community-led growth without losing what made it work in the first place.

Ready to Build Your Detection-First System?

Scaling community-led growth isn’t about doing more. It’s about doing the right things in the right order.

Use AI to see what humans can’t. Keep trust human. Build systems that protect relationships instead of replacing them. That’s how community becomes a long-term moat instead of a short-term experiment.

Most teams know they need to scale community differently. The challenge is knowing where to start and what to prioritize first.

If your community is growing past the point where personal attention works, we can help you build the systematic approach without losing authenticity. We start with a free Blind Spot Review: a 30-minute call where Jono listens to what you’re building and points out what you’re likely missing.

No sales pitch. Just specific feedback on where detection-first engagement would have the biggest impact for your situation.

Frequently Asked Questions – Scaling Community-Led Growth

What are the best practices for scaling community-led growth in tech startups?

Best practices for scaling community-led growth in tech startups include using AI for detection to identify high-value engagement opportunities, keeping all trust-building interactions human, implementing systematic review cycles like Stateshift’s Acceleration Flywheel, and measuring outcomes tied to retention and activation rather than vanity metrics. The key is scaling systems and intelligence, not relationships themselves.

How can AI be used to scale developer communities authentically?

AI should be used for pattern detection across GitHub, Discord, forums, and product data to identify developers showing increasing velocity or engagement. Stateshift’s approach uses AI to surface who needs attention and when, but always keeps humans responsible for actual outreach and relationship-building. This preserves authenticity while enabling scale.

How do you measure ROI from community-led growth?

Measure detection accuracy to validate your systems, engagement conversion to assess relationship-building effectiveness, and relationship durability at 90 days to ensure lasting connections. Connect these to product adoption metrics and contribution velocity. The goal is tying community engagement directly to retention, activation, and long-term revenue impact rather than vanity metrics.

Why do most developer communities lose engagement as they scale?

Communities lose engagement when teams either rely on manual heroics that don’t scale or over-automate interactions that erode trust. The underlying issue is treating community like a channel instead of an ecosystem. Without systematic approaches to detect opportunities and sequence work, scale amplifies noise instead of value.

What’s the biggest mistake companies make when scaling developer engagement?

The biggest mistake is trying to automate trust. Companies use AI to generate personalized messages at scale or deploy chatbots for community interaction, which creates the appearance of engagement without substance. At Stateshift, we see this consistently: AI should make you smarter about where to focus attention, not replace the attention itself.