Your GitHub repository has 2,347 stars and you’re feeling rather pleased with yourself. Brilliant!

Except…most of the people who clicked that little icon felt momentarily virtuous and then promptly forgot your project exists.

They’re never coming back. They’re not using your code. They couldn’t even tell you what your project does if you paid them.

I know this because we’ve been that person. You’ve been that person. We’ve all rage-starred some project at 2 AM after reading a breathless Hacker News headline, thinking “This could revolutionize my workflow!”

Spoiler: it didn’t, because we never actually tried it.

So what metrics actually matter for open source projects? At Stateshift, we help open source teams answer exactly that question. After years of working with clients, we’ve identified five metrics that consistently correlate with real growth: package downloads, issue quality, contributor retention, community discussion depth, and usage telemetry.

A brave new world of vanity metrics

After spending years watching open source projects obsess over star counts while their actual communities withered, we’ve developed a rather unfashionable opinion: GitHub stars are the participation trophies of the developer world.

They make you feel accomplished whilst delivering precisely nothing of value.

They’re kinda the digital equivalent of your mum telling you that your macaroni art is “very creative, dear.”

…but the brutal mathematics of it all? Well, think about your own behavior. How many repositories have you starred because something looked interesting, only to never actually clone or use it?

I’ll wager it’s most of them. GitHub stars are bookmarks with delusions of grandeur…gestures of vague future interest that rarely translate into actual usage. It’s like having restaurant reviews from people who’ve only looked at the menu through the window.

So, in this guide, you’ll discover the five metrics that actually indicate whether your project has legs…metrics that correlate with real adoption, genuine community engagement, and yes, the possibility of building something that matters.

These are the kind of metrics we put in place when we start working with new customers at Stateshift. The only way to drive results is to measure reality regularly, and I promise you, implementing all five of the things we suggest below is significantly easier than gaming the star system with bot networks (don’t pretend you haven’t thought about it).

I know what you’re thinking

Look, I get it. You’ve been reporting GitHub stars to your VP of Engineering for the past year. Your investors ask about star growth in board meetings. Your CEO tweets about hitting milestones.

The last thing you want to hear is that you’ve been tracking the wrong thing.

But here’s the reality: your leadership wants proof that your open source strategy is working. Stars feel like that proof because they’re visible and easy to count. The problem isn’t that you’re measuring popularity; it’s that popularity and actual adoption are two completely different things.

What we’re about to show you isn’t ‘stop tracking stars’ – it’s ‘track these five things alongside stars, and watch which numbers actually correlate with the outcomes your leadership cares about: production usage, ecosystem growth, and community sustainability.’

You’ll still have those star charts in your quarterly deck. But you’ll also have metrics that tell you what’s actually happening.

Screw sentiment, focus on behavior

So, why do so many companies focus on GitHub stars? Well, they see stars as an indicator of sentiment…but really a star is a vague gesture of approval that usually means absolutely nothing.

What you need to pay attention to instead is behavior: what people actually do with your code when they think nobody’s watching. Sentiment is noise, behavior is signal.

This flips conventional open source wisdom on its head. Instead of optimizing for passive appreciation (stars, watches, forks that go nowhere), we want to optimize for active participation.

These five metrics are what Stateshift uses to help clients figure out what actually matters when measuring open source project health. Let’s break down each one.

Step 1: Package downloads and active installs

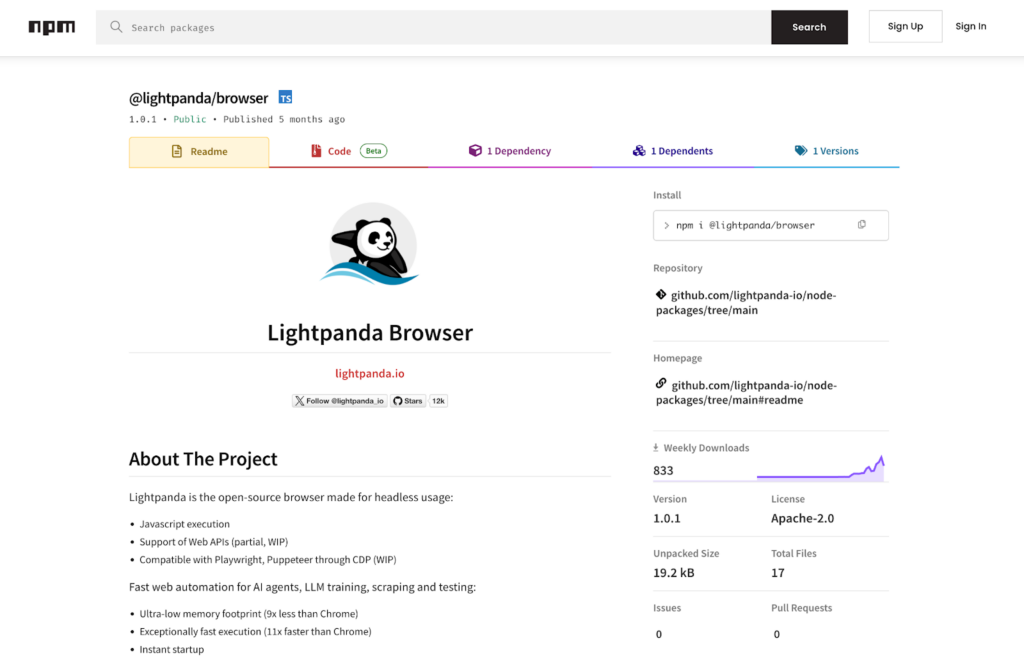

Right, let’s start with the most fundamental proof that anyone actually gives a shit about your project: downloads. Not stars. Not watchers. Actual, genuine, someone-typed-a-command-and-pulled-your-code-onto-their-machine downloads.

When someone runs npm install your-package or adds your library to their production dependencies, they’re making a real commitment.

They, or their team, now have to maintain this dependency, and their company is relying on your code. That’s infinitely more meaningful than clicking a little star icon.

Setting this up is often fairly straightforward. For npm packages, check your download stats on npmjs.com. Python developers can track PyPI downloads. For Docker images, monitor pull counts. The data’s already there, waiting for you to pay attention to it instead of refreshing your star count for the fifteenth time today.
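If you’d rather watch the trend than eyeball a single number, npm also exposes download counts through a public API. Here’s a minimal sketch, assuming the `https://api.npmjs.org/downloads/range/last-month/<package>` endpoint returns a `downloads` list of `{"day": ..., "downloads": ...}` entries; the helper names are hypothetical. Raw counts include CI runs and mirrors, so the direction of travel matters more than the absolute figure.

```python
from __future__ import annotations

import json
import urllib.request
from datetime import date

# Assumption: npm's public range endpoint and its JSON shape, as described above.
NPM_RANGE_URL = "https://api.npmjs.org/downloads/range/last-month/{package}"


def fetch_daily_downloads(package: str) -> list[dict]:
    """Fetch daily download counts for an npm package (makes a network call)."""
    with urllib.request.urlopen(NPM_RANGE_URL.format(package=package), timeout=10) as resp:
        payload = json.load(resp)
    return payload["downloads"]  # e.g. [{"day": "2024-01-01", "downloads": 123}, ...]


def weekly_totals(daily: list[dict]) -> dict[str, int]:
    """Sum daily counts into ISO weeks, keyed like '2024-W05' and sorted by week."""
    totals: dict[str, int] = {}
    for entry in daily:
        year, week, _ = date.fromisoformat(entry["day"]).isocalendar()
        key = f"{year}-W{week:02d}"
        totals[key] = totals.get(key, 0) + entry["downloads"]
    return dict(sorted(totals.items()))


def week_over_week_change(totals: dict[str, int]) -> float | None:
    """Percentage change between the two most recent weeks in `totals`."""
    values = list(totals.values())
    if len(values) < 2 or values[-2] == 0:
        return None
    return (values[-1] - values[-2]) / values[-2] * 100
```

Usage: `weekly_totals(fetch_daily_downloads("your-package"))`. Drop the current, partial week before calling `week_over_week_change`, or it will always look like a slump.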

Here’s what’s brilliant about this metric: it’s self-selecting. You can’t accidentally download a package the way you can accidentally star a repo after three bottles of Stella.

Every installation represents deliberate intent. And here’s the kicker…many successful projects have discovered their actual user base far exceeds their GitHub stars because enterprises and production teams often don’t star repos. They just quietly deploy them, use them in production, and never bother with the performative appreciation.

Step 2: Measure issues (and whether they’re any good)

Now, here’s where things get interesting. You need to start measuring not just how many issues you’re getting, but the signal-to-noise ratio.

How many of these issues represent genuine usage, versus questions from confused beginners who didn’t read the README or bug reports from people who fundamentally misunderstand what your project does? High-quality issues are 24-carat, diamond-encrusted, rock-and-roll gold. They indicate actual users hitting actual edge cases in actual production scenarios.

These people have read your documentation, attempted an implementation, and encountered something worth reporting. They’re invested. They care. They’re not just drive-by complainers who couldn’t be bothered to check if their problem was already solved in the FAQ.

The Kubernetes project receives thousands of issues across its repositories, but maintainers learned early on to distinguish between noise and signal.

They implemented detailed issue templates and bot automation that checks for required information, significantly reducing low-quality reports from users who skipped the documentation.

So here’s what you do: create issue templates that require actual information. Make it impossible to submit a one-line “it doesn’t work pls help” report. Then start tracking the metrics that matter: issue resolution time, the percentage of issues from repeat contributors, and, most importantly, issues that lead to feature requests rather than bug reports.
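The bot that checks for required information doesn’t need to be clever. Here’s a rough sketch, assuming your template asks for Markdown headings such as “### Steps to reproduce”; the section names and `missing_sections` are hypothetical, and wiring it to a webhook or GitHub Action is left out.

```python
import re

# Hypothetical headings your issue template asks reporters to fill in.
REQUIRED_SECTIONS = ["Steps to reproduce", "Expected behavior", "Actual behavior", "Version"]


def missing_sections(body: str, required: list[str] = REQUIRED_SECTIONS) -> list[str]:
    """Return the required sections that are absent or left empty in an issue body."""
    missing = []
    for section in required:
        # Capture everything between this heading and the next Markdown heading (or the end).
        pattern = rf"^#+\s*{re.escape(section)}\s*$(.*?)(?=^#+\s|\Z)"
        match = re.search(pattern, body, flags=re.MULTILINE | re.DOTALL | re.IGNORECASE)
        if match is None or not match.group(1).strip():
            missing.append(section)
    return missing
```

A bot can then comment with the missing sections and label the issue as needing more information, instead of a maintainer doing it by hand.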

Smart teams segment their issues by quality, tracking which come from one-time reporters versus active users who’ve filed multiple substantive reports. This distinction matters enormously because repeat issue-filers are your actual user base.
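Once you’ve exported your issues, that segmentation is a few lines of counting. A sketch, assuming each issue is a dict with hypothetical `author` and `substantive` fields, where `substantive` is whatever your triage decides (say, not closed as invalid or duplicate).

```python
from collections import Counter


def reporter_segments(issues: list[dict]) -> dict:
    """Split substantive issues between one-time and repeat reporters."""
    substantive = [i for i in issues if i["substantive"]]
    per_author = Counter(i["author"] for i in substantive)
    repeat_authors = {author for author, n in per_author.items() if n >= 2}
    from_repeat = sum(1 for i in substantive if i["author"] in repeat_authors)
    total = len(substantive)
    return {
        "repeat_reporters": len(repeat_authors),
        "one_time_reporters": len(per_author) - len(repeat_authors),
        # Share of substantive issues filed by people who've filed at least two.
        "share_from_repeat": from_repeat / total if total else 0.0,
    }
```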

Tag your issues by type: bug, feature, documentation, question. Then…watch the ratios over time. A healthy project sees bug reports declining as documentation improves, and feature requests increasing as users push boundaries. If your bug-to-feature ratio is stuck or getting worse, you’ve got a problem…probably with your docs or onboarding.
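Watching the bug-to-feature ratio month by month is similarly small. A minimal sketch, assuming each issue is a dict with a `created` date and a set of `labels` drawn from the four types above (field names hypothetical); a month with no feature requests returns None rather than dividing by zero.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date

TYPES = ("bug", "feature", "documentation", "question")


def bug_to_feature_by_month(issues: list[dict]) -> dict[str, float | None]:
    """Bug-to-feature ratio per calendar month, oldest month first."""
    counts = defaultdict(lambda: dict.fromkeys(TYPES, 0))
    for issue in issues:
        month = issue["created"].strftime("%Y-%m")
        for label in issue["labels"]:
            if label in TYPES:
                counts[month][label] += 1
    return {
        month: (c["bug"] / c["feature"] if c["feature"] else None)
        for month, c in sorted(counts.items())
    }
```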

Step 3: Build a contributor retention funnel

Let’s talk about contributors, shall we? Because this is where the rubber meets the road.

A star is someone who waved at you once at a party. A contributor is someone who rolled up their sleeves, got their hands dirty, and actually helped you build something.

The difference between these two things is the difference between a polite nod and a genuine friendship.

You need to track the journey from first-time contributor to regular maintainer, measuring drop-off at each stage.

Map your contributor funnel like this: first PR → second PR → third PR → regular contributor → maintainer. Then measure the time between contributions and figure out where people are falling off.
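The PR-count stages of that funnel fall straight out of merged-PR authorship. Here’s a minimal sketch, assuming a list with one author login per merged PR; “regular contributor” and “maintainer” depend on your own definitions, so it stops at three PRs, and `pr_funnel` is a hypothetical name.

```python
from collections import Counter


def pr_funnel(merged_pr_authors: list[str], stages: tuple[int, ...] = (1, 2, 3)) -> list[tuple[int, int, float]]:
    """For each stage N: (N, contributors with at least N merged PRs, share carried over from the previous stage)."""
    per_author = Counter(merged_pr_authors)
    rows = []
    previous = None
    for n in stages:
        reached = sum(1 for count in per_author.values() if count >= n)
        if previous is None:
            conversion = 1.0  # first stage is the baseline
        else:
            conversion = reached / previous if previous else 0.0
        rows.append((n, reached, conversion))
        previous = reached
    return rows
```

The stage with the lowest conversion is where people are falling off.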

But here’s the metric that matters most: time to second PR. Successful open source projects obsess over this number…the window between someone’s first and second contribution.

Why?

Because contributors who return quickly are far more likely to become regular contributors.

It’s like dating. If there’s chemistry and you see each other again within a reasonable timeframe, something might develop. If weeks turn into months, the momentum dies.
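Measuring that window takes nothing more than a list of PRs with their authors and dates. A minimal sketch, assuming you’ve exported `(author, opened_at)` pairs; whether you use opened or merged dates is your call, and the function names are hypothetical.

```python
from __future__ import annotations

from datetime import datetime
from statistics import median


def days_to_second_pr(prs: list[tuple[str, datetime]]) -> dict[str, float]:
    """Days between each contributor's first and second PR; one-PR contributors are skipped."""
    by_author: dict[str, list[datetime]] = {}
    for author, opened_at in prs:
        by_author.setdefault(author, []).append(opened_at)
    gaps = {}
    for author, times in by_author.items():
        if len(times) >= 2:
            first, second = sorted(times)[:2]
            gaps[author] = (second - first).total_seconds() / 86400
    return gaps


def median_days_to_second_pr(prs: list[tuple[str, datetime]]) -> float | None:
    """Median of the gaps above, or None if nobody has come back yet."""
    gaps = days_to_second_pr(prs)
    return median(gaps.values()) if gaps else None
```

Track the median per cohort (say, by month of first PR) so you can see whether your changes are actually shrinking the window.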

So optimize everything around reducing that window. Your contributing guide should be crystal clear. Your issue labeling should make it dead obvious what’s available for newcomers. And, most crucially, respond to PRs within 48 hours.

Speed of feedback directly correlates with contributor retention. Nobody wants to submit a PR and then hear crickets for a fortnight.
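If you’re going to promise 48 hours, measure it. A minimal sketch, assuming each PR record carries hypothetical `opened_at` and `first_response_at` fields (the first comment or review from someone other than the author, or None); PRs still inside the window with no reply yet are left out rather than counted as misses.

```python
from __future__ import annotations

from datetime import datetime, timedelta

SLA = timedelta(hours=48)


def response_sla_rate(prs: list[dict], now: datetime) -> float | None:
    """Share of PRs whose first response arrived within 48 hours of opening."""
    met = missed = 0
    for pr in prs:
        responded = pr["first_response_at"]
        if responded is not None:
            if responded - pr["opened_at"] <= SLA:
                met += 1
            else:
                missed += 1
        elif now - pr["opened_at"] > SLA:
            missed += 1  # no response yet and the window has already closed
    total = met + missed
    return met / total if total else None
```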

Set up automation to celebrate first PRs publicly. Make people feel welcome and appreciated. Apply good-first-issue labels religiously. Treat new contributors like the precious resource they are, because once you’ve got someone who’s contributed twice, you’re well on your way to having a proper maintainer.

Step 4: The gold is in community discussions

Here’s another thing stars won’t tell you: whether people are actually using your project to solve real problems.

You know what does? Deep, meaty community discussions where people are wrestling with implementation details, sharing solutions, and teaching each other how things work.

This is where you separate the tourists from the residents.

Tourists star your repo and move on. Residents set up shop, ask questions, answer other people’s questions, and build a genuine knowledge base around your project.

Tailwind CSS’s Discord server has become legendary for its self-sustaining help culture. With over 10,000 members, the community answers each other’s questions in real-time without constant maintainer intervention. Users share project showcases, debug CSS issues together, and have developed their own informal “helper” culture where experienced members guide newcomers through common problems.

This organic knowledge sharing is how projects become movements rather than just codebases.

But you can’t just count messages. That’s another vanity metric in disguise. Instead, measure thread length, response rates from non-maintainers, and solution quality.

The metric that matters most? Track discussions where community members solve each other’s problems without maintainer intervention. This is the holy grail. When your community can sustain itself, answering questions and helping newcomers without you having to step in every single time, you’ve achieved something special. It means you can scale the project without burning out the core team.
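Here’s roughly what those measurements might look like in code. A minimal sketch, assuming threads exported from your forum or Discord as hypothetical dicts with a `messages` list (each with an `author`) and a `solved` flag, plus a set of maintainer handles; “solved without maintainer intervention” here means no maintainer posted in the thread at all.

```python
from statistics import mean


def discussion_health(threads: list[dict], maintainers: set[str]) -> dict:
    """Thread depth, community reply share, and share of solved threads with no maintainer involvement."""
    if not threads:
        return {}
    replies = [m for t in threads for m in t["messages"][1:]]  # skip each opening post
    community_replies = [m for m in replies if m["author"] not in maintainers]
    solved = [t for t in threads if t["solved"]]
    solved_by_community = [
        t for t in solved if all(m["author"] not in maintainers for m in t["messages"])
    ]
    return {
        "avg_thread_length": mean(len(t["messages"]) for t in threads),
        "community_reply_share": len(community_replies) / len(replies) if replies else 0.0,
        "solved_without_maintainer": len(solved_by_community) / len(solved) if solved else 0.0,
    }
```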

Create dedicated channels for showcases where users share what they’ve built with your project. When you see users helping other users, celebrating each other’s work, and solving problems collaboratively, you’ve transcended the star-collecting game entirely.

You’ve built something that people actually care about and want to see succeed.

Step 5: Track usage (but don’t be a creepy weirdo)

Right, this one’s a bit controversial, but hear us out.

You need to implement optional, privacy-respecting telemetry that shows how people actually use your project in the wild. Not to be creepy. Not to harvest data. But because it’s the only way to know if people are using the features you’re building or just cargo-culting your getting-started tutorial indefinitely.

Make it opt-in. Make it anonymous. Make it transparently documented. Users need to know exactly what you’re collecting and why.
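Here’s what opt-in and anonymous can mean in practice. A minimal sketch, assuming a hypothetical `YOURTOOL_TELEMETRY` environment variable that users set to `1`, and an allow-list of fields you’d publish in your docs; events are only held in memory, and sending them to an endpoint you control is left out.

```python
import os
import time
from collections.abc import Mapping

OPT_IN_VAR = "YOURTOOL_TELEMETRY"  # hypothetical; users set it to "1" to opt in
ALLOWED_FIELDS = {"duration_ms", "error_type"}  # the documented, non-identifying extras


class Telemetry:
    """Anonymous usage events; nothing is recorded unless the user has opted in."""

    def __init__(self, version: str, environ: Mapping[str, str] = os.environ):
        self.enabled = environ.get(OPT_IN_VAR) == "1"
        self.version = version
        self.events: list[dict] = []  # a real client would batch these to an endpoint you document

    def record(self, feature: str, **fields) -> None:
        if not self.enabled:
            return
        # Keep only allow-listed fields; anything else (paths, hostnames, ...) is dropped.
        event = {k: v for k, v in fields.items() if k in ALLOWED_FIELDS}
        # Coarsen the timestamp to the hour so events are harder to correlate.
        event.update(feature=feature, version=self.version, ts=int(time.time()) // 3600 * 3600)
        self.events.append(event)
```

The allow-list doubles as your documentation: if a field isn’t on it, it never leaves the user’s machine.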

Next.js has done this rather well…its anonymous telemetry shows which features and configuration options developers actually use, helping the team decide what to deprecate and what to double down on. It’s on by default rather than opt-in, but switching it off is a single command (next telemetry disable), and the team documents exactly what they collect and why, building trust with their developer community rather than eroding it.

But…what should you track?

Well, feature usage, error rates in production, and time-to-first-success metrics. The insights from real usage data often contradict your assumptions in spectacular ways.

You’ll discover that some heavily-promoted features see minimal adoption, while obscure configuration options become surprisingly popular in production environments. This information is gold. It tells you where to focus your limited time and energy.
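Once events are coming in, feature adoption and time-to-first-success reduce to a bit of counting. A minimal sketch, assuming each event carries a random per-install ID that isn’t tied to a person, plus `feature`, `ok`, and `ts` (seconds) fields; all of these names are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from statistics import median


def feature_usage(events: list[dict]) -> list[tuple[str, int]]:
    """Features ranked by how many distinct installs used them."""
    pairs = {(e["install"], e["feature"]) for e in events}
    return Counter(feature for _, feature in pairs).most_common()


def median_time_to_first_success(events: list[dict]) -> float | None:
    """Median seconds from an install's first event to its first successful one."""
    first_seen: dict[str, int] = {}
    first_ok: dict[str, int] = {}
    for e in sorted(events, key=lambda e: e["ts"]):
        first_seen.setdefault(e["install"], e["ts"])
        if e["ok"]:
            first_ok.setdefault(e["install"], e["ts"])
    gaps = [first_ok[i] - first_seen[i] for i in first_ok]
    return median(gaps) if gaps else None
```

Counting distinct installs rather than raw events stops one enthusiastic CI pipeline from making a feature look wildly popular.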

Without telemetry, you’re flying blind. You’re making product decisions based on what’s loudest in your GitHub issues, which is rarely representative of what your silent majority actually needs. Stars won’t tell you this. Download counts won’t tell you this. Only actual usage data will.

Overwhelmed? Don’t be…you can do this

Look, I get it. Right now you’re probably thinking “shit, that’s a lot of infrastructure for my side project that I built last Tuesday.”

Fair point. But here’s the thing: you don’t need to implement all five simultaneously. You need to implement one this week.

Start with package downloads…that’s literally just checking a website. Add issue templates next week. Incrementally build the measurement system that gives you actual signal instead of vanity noise.

The folks at Supabase (the open source Firebase alternative) demonstrated this principle brilliantly. In their early days in spring 2020, they hit the front page of Hacker News and their database count exploded from 8 to 800 literally overnight.

But they weren’t measuring vanity metrics…they were tracking actual database deployments and active usage. By the end of their alpha in July 2020, they had 3,000 hosted databases.

That real usage data gave them confidence to keep building, and today they power millions of databases globally.

Your open source metrics action plan

So, let’s recap what actually matters:

- Track package downloads and installations – Measure deliberate deployment, not passive interest

- Monitor issue quality and resolution – Focus on signal from actual users hitting real edge cases

- Build contributor retention funnels – Convert one-time helpers into ongoing maintainers

- Measure community discussion depth – Watch for organic knowledge sharing and peer support

- Implement usage telemetry – Understand how people actually use your project in production

Follow these five steps and you’ll build something rare: a project that people don’t just appreciate in theory, but actually use, contribute to, and advocate for in practice. You’ll have concrete evidence of impact instead of a vanity number that means nothing.

Look…stars might make your project look popular…no doubt…but downloads, contributors, and engaged communities?

Those make your project actually useful. And useful tends to win in the long run, even if it’s not as immediately gratifying as watching that star count tick upward.

Now stop refreshing your GitHub stars and go check your package download trends instead. That’s where the actual story lives.

Want help figuring out which of these five metrics matter most for your project? Stateshift works with open source teams to cut through the noise and focus on signals that correlate with actual growth. Book a call with Jono for a blind spot review.

Prefer watching to reading? Here’s Jono breaking down exactly why GitHub stars don’t mean what you think they mean.

FAQ

What metrics matter for open source projects?

Five metrics consistently correlate with real open source adoption: package downloads (actual installations), issue quality (production edge cases from real users), contributor retention (especially time to second PR), community discussion depth (users solving each other’s problems), and usage telemetry (how features get deployed). These measure behavior rather than sentiment—what people actually do with your code rather than passive interest like GitHub stars. Stateshift uses this framework to help teams track real community health and adoption.

Are GitHub stars a good metric for open source success?

No. GitHub stars measure passive interest, not actual usage or adoption. Most developers star repositories and never use them. Stars are bookmarks that rarely translate into installations, contributions, or production deployments. Focus on downloads, quality issues from real users, and contributor retention instead.

How do you measure if an open source project is actually being used?

Package downloads show deliberate installation. High-quality issues indicate production edge cases. Contributor retention (especially time to second PR) reveals ongoing investment. Community discussions where users solve each other’s problems prove engagement. Usage telemetry shows which features get deployed in real environments.

What should you track instead of GitHub stars?

Track package downloads (actual installations), issue quality (production edge cases from real users), contributor retention (especially time to second PR), community discussion depth (users helping each other), and usage telemetry (how features get deployed). Stateshift helps open source teams implement these measurement systems to understand real adoption and community health beyond star counts.